
By Martijn Naaijer

Faculty of Theology

University of Copenhagen

By Anders Søgaard

Department of Computer Science

University of Copenhagen

By Martin Ehrensvärd

Faculty of Theology

University of Copenhagen

March 2022

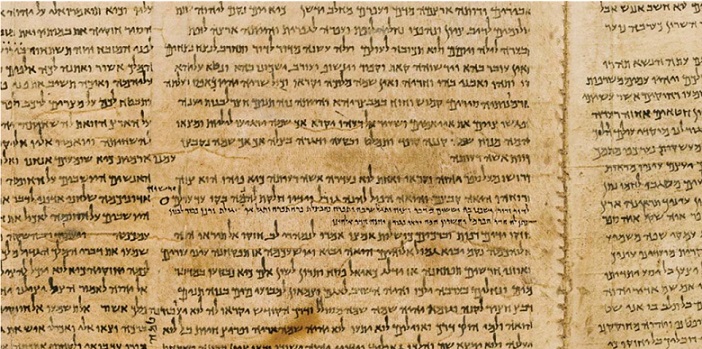

The Great Isaiah Scroll from Qumran

Abstract

Over recent decades it has become clear that the Hebrew biblical texts kept growing and developing to a remarkable extent even in relatively late times. A better understanding of this process is crucial in interpreting the texts. In this two-year collaboration between the Faculty of Theology and the Department of Computer Science at the University of Copenhagen, Denmark, we will carry out a machine learning-based investigation of the textual and linguistic history of the Hebrew Bible, based on the biblical texts found among the Dead Sea Scrolls. No adequate method for this yet exists, so we will develop one using machine learning, a subfield of artificial intelligence in which important advances have been made during the last decade.

Artificial intelligence

Artificial intelligence has become a lot more intelligent over the past decade. This is evident in, e.g., Google searches or Google Translate, and it is also observable in our smartphones. Developments happen incrementally and continuously, so they sneak up on you, but if you compare today's phones and services to those of ten years ago, the development is astounding. What we are doing in this project would also not have been possible until very recently.

Great improvements on three fronts have made this possible. Firstly, new methods in the field of machine learning have proved very effective. Secondly, hardware has developed to the point where massive computations are widely available and finish quickly, rather than requiring a long wait for a single calculation. They can even be run for free, e.g., on Google's servers. Thirdly, and most importantly, repositories of digitized ancient texts have grown immensely, and many are freely available online, e.g., on GitHub.

Artificial intelligence is a rather broad term. A very important, concrete form of it is machine learning, which is what we are working with in this project. Using machines, we can now analyze linguistic expressions that are both complex and frequent. We can create a hitherto unmatched overview of the material, and we can (in principle) do so with less human bias than was possible before. Semitic philologists and linguists have traditionally tried to absorb as much linguistic data as humanly possible. This developed their intuition, which helped them reconstruct the history of the languages and understand new texts as they came to light (significant ancient Semitic texts come to light every year). All this is well and good. But humans have their limitations.

NLP

Natural Language Processing (NLP) seeks to understand and generate natural language. The field dates back to the 1950s, and optimism ran high when, in January 1954, an IBM mainframe computer translated a number of Russian sentences into English as a proof of concept, in what is known as the Georgetown-IBM experiment. It turned out to be a very hard problem to solve properly, however. If you used Google Translate in the past, you will know how poor a translator it was (compared to skilled humans) until fairly recently. If you haven't used it during the past five years or so, you will be astonished at how much better it works nowadays. It now produces sentence after sentence of often good English, Danish, French, etc., often (although by no means always) correctly translated. New models and techniques, such as deep learning (using, e.g., LSTM models or Transformers like BERT and GPT-2), have been crucial in this development; see, e.g., Raaijmakers 2022. In this project these techniques will be applied to the study of Ancient Hebrew. We will furthermore receive crucial help from the field of bioinformatics, where massive genetic sequences, not unlike natural language, are analyzed (see, e.g., Balaban et al. 2019).
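To make the bioinformatics connection more concrete, here is a minimal sketch of one classic sequence-analysis technique, global alignment with the Needleman-Wunsch algorithm, applied to words instead of nucleotides. It is an illustration under stated assumptions, not the project's method: the scoring values (match +1, mismatch -1, gap -1), the function name `align`, and the two "witnesses" (English glosses standing in for two versions of one sentence) are all hypothetical.

```python
from typing import List, Tuple

GAP = "-"  # placeholder for a word present in one witness but not the other


def align(a: List[str], b: List[str], match: int = 1,
          mismatch: int = -1, gap: int = -1) -> Tuple[int, List[Tuple[str, str]]]:
    """Needleman-Wunsch global alignment of two word sequences.

    Returns the optimal score and one optimal list of aligned word pairs.
    """
    n, m = len(a), len(b)

    def sub(i: int, j: int) -> int:
        # Score for aligning word a[i-1] with word b[j-1]
        return match if a[i - 1] == b[j - 1] else mismatch

    # score[i][j] holds the best score for aligning a[:i] with b[:j]
    score = [[0] * (m + 1) for _ in range(n + 1)]
    for i in range(1, n + 1):
        score[i][0] = i * gap
    for j in range(1, m + 1):
        score[0][j] = j * gap
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            score[i][j] = max(score[i - 1][j - 1] + sub(i, j),
                              score[i - 1][j] + gap,
                              score[i][j - 1] + gap)

    # Trace back from the bottom-right cell to recover one optimal alignment
    pairs: List[Tuple[str, str]] = []
    i, j = n, m
    while i > 0 or j > 0:
        if i > 0 and j > 0 and score[i][j] == score[i - 1][j - 1] + sub(i, j):
            pairs.append((a[i - 1], b[j - 1]))
            i, j = i - 1, j - 1
        elif i > 0 and score[i][j] == score[i - 1][j] + gap:
            pairs.append((a[i - 1], GAP))
            i -= 1
        else:
            pairs.append((GAP, b[j - 1]))
            j -= 1
    pairs.reverse()
    return score[n][m], pairs


# Two hypothetical witnesses to the same sentence (toy glosses, not real data)
witness_1 = ["and", "he", "said", "to", "him"]
witness_2 = ["and", "said", "to", "him", "again"]
total, aligned = align(witness_1, witness_2)
for left, right in aligned:
    print(f"{left:>6} | {right}")
```

Each `GAP` in the output marks a word that one witness has and the other lacks, which is the kind of plus/minus variation textual critics record by hand.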

The problem of Hebrew

The Hebrew Bible is a foundational text for three world religions, but its early development remains surrounded by riddles. The development of the text is studied in textual criticism, and the development of the language in historical linguistics. These are mostly distinct fields of research, but for a complete picture of the history of the Bible they need to be integrated, because we often possess several, sometimes many, different versions of the same Hebrew or Aramaic text. The overall aim of the project is to develop a method to integrate these fields using cutting-edge machine learning techniques, with the goal of obtaining a much more complete picture of the development of the text and language of the Hebrew Bible than is currently possible.

The Hebrew Bible is long-history literature, in the sense that the biblical texts have had a long history of composition, editing, and transmission. The oldest surviving manuscripts are the versions of the biblical books used by the sect that produced the Dead Sea Scrolls, but the texts had already been passed down for centuries before that. In this process, the texts continuously grew and changed. We know that many variations cropped up during the transmission of the text, as texts often were copied imperfectly.

Many scholars acknowledge that the history of the biblical texts and the history of biblical Hebrew are related problems, and that you cannot meaningfully study one without the other. However, in practice, textual criticism and historical linguistics are still two distinct areas of research. Most studies on the history of biblical Hebrew are based on one medieval manuscript, Codex Leningradensis (L, dated to 1008-1009 CE). Language scholars using this manuscript as their main source argue that its language has been preserved well over many centuries (Hendel and Joosten 2018, 49; Rezetko and Young 2014, 160).

But the consensus among textual scholars is that the textual tradition represented in L is simply one of many traditions and should not be given the prominent place assigned to it by language scholars (Tov 2012, 11-12), because textual variation is more random than is often supposed. This is a point of great contention in the field. The work of Rezetko, Young and Ehrensvärd was instrumental in getting the field to grapple with this problem (Young et al. 2008).

By investigating how the texts of manuscripts cluster and how (in)consistent language variation is between and within manuscripts, a much better understanding of the dynamics of textual transmission and language history will be obtained.
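As a rough illustration of what "how the texts of manuscripts cluster" can mean computationally, the sketch below scores each pair of witnesses by the share of variant locations where they disagree and then groups them with average-linkage agglomerative clustering. This is a simple stand-in technique, not the project's method, and everything in it is hypothetical: the sigla `W1` to `W4`, the readings `"a"`/`"b"`, and the choice to treat `None` as a lacuna (a damaged, unreadable spot, common in fragmentary scrolls) that is skipped when comparing.

```python
from itertools import combinations
from typing import Dict, FrozenSet, List, Optional, Tuple

Readings = Dict[str, List[Optional[str]]]


def disagreement(x: List[Optional[str]], y: List[Optional[str]]) -> float:
    """Share of variant locations, attested in both witnesses, where they differ."""
    shared = [(p, q) for p, q in zip(x, y) if p is not None and q is not None]
    if not shared:
        return 1.0  # assumption: no overlapping text counts as maximally distant
    return sum(p != q for p, q in shared) / len(shared)


def average_linkage(readings: Readings) -> List[Tuple[List[str], List[str], float]]:
    """Merge witnesses step by step, always joining the two closest clusters."""
    dist = {frozenset((a, b)): disagreement(readings[a], readings[b])
            for a, b in combinations(readings, 2)}

    def linkage(c1: FrozenSet[str], c2: FrozenSet[str]) -> float:
        # Average-linkage: mean pairwise distance between members of the clusters
        values = [dist[frozenset((a, b))] for a in c1 for b in c2]
        return sum(values) / len(values)

    clusters = [frozenset([siglum]) for siglum in readings]
    merges = []
    while len(clusters) > 1:
        c1, c2 = min(combinations(clusters, 2), key=lambda pair: linkage(*pair))
        merges.append((sorted(c1), sorted(c2), linkage(c1, c2)))
        clusters = [c for c in clusters if c not in (c1, c2)] + [c1 | c2]
    return merges


# Hypothetical readings at four variant locations; None marks a lacuna
toy = {
    "W1": ["a", "a", "a", "a"],
    "W2": ["a", "a", "a", "b"],
    "W3": ["b", "b", None, "b"],
    "W4": ["b", "b", "b", "b"],
}
for left, right, d in average_linkage(toy):
    print(left, right, round(d, 3))
```

The merge order is a crude family tree of the witnesses. Checking how consistently linguistic features follow (or cut across) such groupings is one way to probe the (in)consistency the paragraph above refers to.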

The team

Our research team is interdisciplinary and includes Martin Ehrensvärd, a specialist in Hebrew; Anders Søgaard, a specialist in machine learning; and Martijn Naaijer, whose recently completed PhD bridges these two fields (Naaijer 2020).

Research questions

The overarching question that we ask ourselves is this: How can machine learning combined with sequence-analysis techniques developed in bioinformatics be applied as an analytical tool for studying the biblical Dead Sea Scrolls and contribute to (1) the study of textual fluidity and stability of the biblical texts, and (2) the description of the history of biblical Hebrew?

Research objectives

The research has two main objectives. The first is to establish a research methodology for analyzing textual fluidity and linguistic variation. No adequate method for this yet exists, so we will develop one using Graph Neural Networks. The second is to develop the methodology further and apply it to a broad analysis of textual and linguistic traditions, in a way potentially applicable to other ancient languages.
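The text does not say which Graph Neural Network architecture the project will use. As a hedged illustration of the basic idea only, the sketch below implements one layer of a generic graph convolutional network (GCN) in plain Python: each node, which here might stand for a hypothetical text unit or manuscript, updates its feature vector by combining its own features with those of its neighbors, weighted by node degree. The graph, the feature values, and the weight matrix `w` are invented; in a trained model `w` would be learned from data.

```python
import math
from typing import List

Matrix = List[List[float]]


def matmul(x: Matrix, y: Matrix) -> Matrix:
    """Plain-Python matrix product (x is n-by-k, y is k-by-m)."""
    return [[sum(x[i][k] * y[k][j] for k in range(len(y)))
             for j in range(len(y[0]))]
            for i in range(len(x))]


def gcn_layer(adj: Matrix, h: Matrix, w: Matrix) -> Matrix:
    """One graph-convolution step: ReLU(D^-1/2 (A + I) D^-1/2 H W)."""
    n = len(adj)
    # Add self-loops so every node also keeps its own features
    a_hat = [[adj[i][j] + (1.0 if i == j else 0.0) for j in range(n)]
             for i in range(n)]
    deg = [sum(row) for row in a_hat]
    # Symmetric normalization by node degree
    norm = [[a_hat[i][j] / math.sqrt(deg[i] * deg[j]) for j in range(n)]
            for i in range(n)]
    # Aggregate neighbor features, then apply the (normally learned) weights
    out = matmul(matmul(norm, h), w)
    return [[max(0.0, v) for v in row] for row in out]  # ReLU


# Hypothetical toy graph: three text units linked in a chain 0 - 1 - 2
adj = [[0.0, 1.0, 0.0],
       [1.0, 0.0, 1.0],
       [0.0, 1.0, 0.0]]
h = [[1.0, 0.0],   # made-up two-dimensional feature vectors
     [0.0, 1.0],
     [1.0, 0.0]]
w = [[1.0, 0.0],   # identity weights; a trained model would learn these
     [0.0, 1.0]]
for row in gcn_layer(adj, h, w):
    print([round(v, 3) for v in row])
```

Stacking several such layers lets information travel further along the graph, so a node's final representation reflects its wider neighborhood rather than only its direct links.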

Bibliography

Balaban, M., Moshiri, N., Mai, U., Jia, X., and Mirarab, S., ‘TreeCluster: Clustering Biological Sequences Using Phylogenetic Trees’, PLoS ONE 14 (8), 2019.

Hendel, R., and Joosten, J., How Old Is the Hebrew Bible? A Linguistic, Textual, and Historical Study, New Haven: Yale University Press, 2018.

Naaijer, M., Clause Structure Variation in Biblical Hebrew: A Quantitative Approach, PhD dissertation, Vrije Universiteit Amsterdam, 2020.

Raaijmakers, S., Deep Learning for Natural Language Processing, Shelter Island, NY: Manning, 2022.

Rezetko, R., and Young, I., Historical Linguistics and Biblical Hebrew: Steps Toward an Integrated Approach, Atlanta: SBL Press, 2014.

Tov, E., Textual Criticism of the Hebrew Bible, third edition, Minneapolis: Fortress, 2012.

Young, I., Rezetko, R., and Ehrensvärd, M., Linguistic Dating of Biblical Texts, two volumes, London: Equinox, 2008.

Article Comments

As Hebrew words seem to have several different meanings (polysemy), does "textual fluidity" include puns and metaphors?

I have an M.Div. and have studied both Greek and Hebrew language and exegesis. I am interested in the use of AI for biblical and theological study, and I already use this technology in my language studies, translation, and interpretation, along with its various implications. I will be retiring soon and would like to be involved in this process of exploring the biblical text with AI. How would I get started?

Hello, thanks for the fascinating read.

I'm a Brazilian NT PhD student at McMaster (Canada) and I'd appreciate your help finding bibliography on applying Deep Learning to create a new Critical Edition of the New Testament (such as the NA28 or UBS5 eclectic texts). Is anyone currently working on that?

Thank you for your time.